Strategy to Transition from Commercial LLMs to Open-Source Models

Client Situation

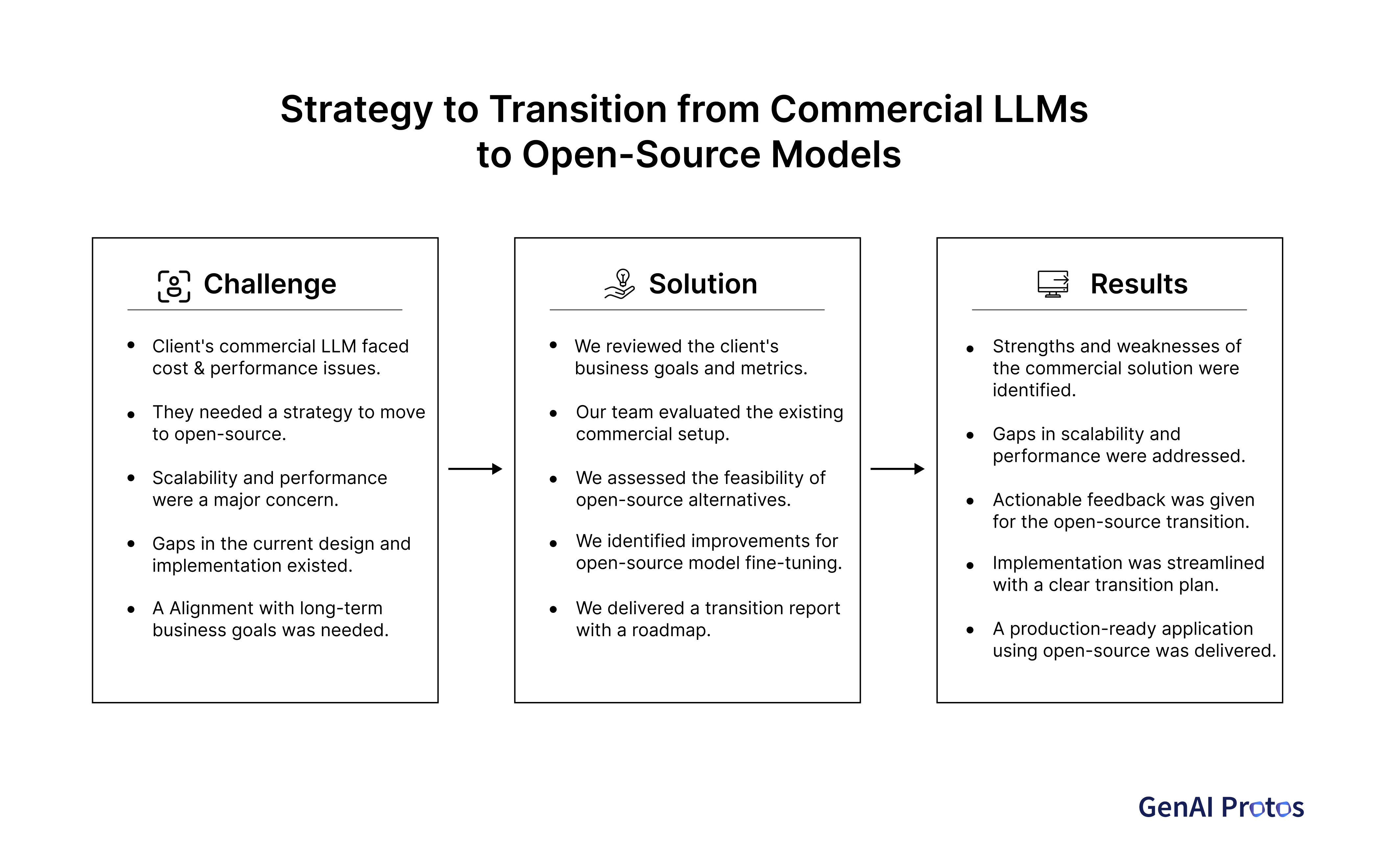

An enterprise was running its LLM-based AI applications on commercial models such as ChatGPT. While the solution delivered functional results, the client faced growing challenges related to:

- High operational costs due to usage-based pricing for commercial models.

- Latency issues that impacted real-time performance and user experience.

- Concerns around accuracy and customization to meet specific business needs.

- Long-term sustainability and control over the models they were using.

The client sought our expertise to conduct a feasibility study and develop a strategy to transition from commercial models to small, open-source LLMs for improved cost efficiency, lower latency, and better optimization.

Our Advisory

Our team provided a structured strategy and feasibility study to address the client’s challenges:

- Requirement Analysis:

  - Reviewed the existing LLM applications, workloads, and performance benchmarks.

  - Identified critical business needs, including response accuracy, real-time performance, and cost constraints.

- Open-Source Model Assessment:

  - Evaluated multiple open-source LLMs (e.g., LLaMA, GPT-NeoX, Falcon, and BLOOM) against criteria such as cost, latency, accuracy, scalability, and customizability.

  - Conducted model benchmarking to compare results against the current commercial solution.

- Infrastructure and Deployment Strategy:

  - Designed an architecture for deploying small, fine-tuned open-source models using cost-efficient cloud infrastructure.

  - Proposed GPU/accelerator utilization strategies to ensure low-latency inference at scale.

- Cost Optimization Analysis:

  - Provided a detailed cost-benefit analysis, showcasing potential savings from switching to open-source models.

  - Suggested approaches for fine-tuning models to improve accuracy while reducing computational costs.

- Implementation Roadmap:

  - Delivered a step-by-step transition plan, including proof-of-concept (POC), pilot testing, and production deployment phases.

  - Included guidelines for data privacy, security, and model governance in the strategy.
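The benchmarking step above, comparing candidate models against the current commercial solution, can be illustrated with a minimal Python sketch. It is not the method used in the engagement. The `BenchmarkResult` fields, the `meets_baseline` and `shortlist` helpers, the model names, and every number are hypothetical placeholders. The sketch assumes that accuracy, 95th-percentile latency, and cost per 1,000 requests have already been measured on a shared evaluation set.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkResult:
    """Measured results for one model on a shared evaluation set (hypothetical schema)."""
    name: str
    accuracy: float               # fraction of evaluation prompts judged correct, 0 to 1
    p95_latency_ms: float         # 95th-percentile response latency in milliseconds
    cost_per_1k_requests: float   # USD per 1,000 requests


def meets_baseline(candidate, baseline, accuracy_tolerance=0.0):
    """True if the candidate is at least as accurate (within tolerance),
    no slower, and strictly cheaper than the baseline."""
    return (
        candidate.accuracy >= baseline.accuracy - accuracy_tolerance
        and candidate.p95_latency_ms <= baseline.p95_latency_ms
        and candidate.cost_per_1k_requests < baseline.cost_per_1k_requests
    )


def shortlist(candidates, baseline, accuracy_tolerance=0.0):
    """Return the candidates that meet the baseline, cheapest first."""
    passing = [c for c in candidates if meets_baseline(c, baseline, accuracy_tolerance)]
    return sorted(passing, key=lambda c: c.cost_per_1k_requests)


if __name__ == "__main__":
    # All values below are illustrative placeholders, not measured data.
    baseline = BenchmarkResult("commercial-baseline", 0.90, 1200.0, 10.0)
    candidates = [
        BenchmarkResult("candidate-a", 0.91, 800.0, 4.0),
        BenchmarkResult("candidate-b", 0.85, 500.0, 2.0),
        BenchmarkResult("candidate-c", 0.92, 1500.0, 3.0),
    ]
    for model in shortlist(candidates, baseline):
        print(model.name, model.cost_per_1k_requests)
```

A hard pass/fail filter keeps the comparison easy to explain to stakeholders. A weighted score across the same criteria would be a reasonable alternative when trade-offs between accuracy and cost are acceptable.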

Outcome

- The client received a clear, actionable feasibility report and transition strategy tailored to their business needs.

- Identified suitable open-source LLMs that matched or exceeded the commercial solution's accuracy and latency benchmarks at a significantly lower cost.

- Reduced projected operational costs by up to 60% through the adoption of open-source models.

- Developed an optimized deployment plan to ensure low-latency, real-time performance while maintaining control over model customization.

- Enabled the client to achieve a more scalable, sustainable, and cost-effective AI infrastructure without compromising on functionality.
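A projected saving of this kind is typically derived by comparing usage-based API spend with the cost of self-hosted inference. The sketch below shows one simple way to set up that arithmetic. It is not the client's actual cost model. The function names, the 730-hour month, and all prices and volumes are assumptions chosen for illustration only.

```python
def monthly_api_cost(tokens_per_month, usd_per_1k_tokens):
    """Usage-based cost: total tokens billed at a per-1,000-token price."""
    return tokens_per_month / 1000 * usd_per_1k_tokens


def monthly_self_hosted_cost(gpu_count, usd_per_gpu_hour,
                             hours_per_month=730, fixed_overhead_usd=0.0):
    """Self-hosted cost: always-on GPU instances plus fixed overhead
    (storage, monitoring, maintenance), all assumed values."""
    return gpu_count * usd_per_gpu_hour * hours_per_month + fixed_overhead_usd


def projected_savings(api_cost, self_hosted_cost):
    """Fraction of the API spend saved by self-hosting (negative if it costs more)."""
    if api_cost <= 0:
        raise ValueError("api_cost must be positive")
    return (api_cost - self_hosted_cost) / api_cost


if __name__ == "__main__":
    # Hypothetical inputs, not figures from the engagement.
    api = monthly_api_cost(tokens_per_month=500_000_000, usd_per_1k_tokens=0.01)
    hosted = monthly_self_hosted_cost(gpu_count=2, usd_per_gpu_hour=1.5,
                                      fixed_overhead_usd=310.0)
    print(f"API: ${api:,.0f}  self-hosted: ${hosted:,.0f}  "
          f"savings: {projected_savings(api, hosted):.0%}")
```

Because self-hosted cost is largely fixed while API cost scales with usage, the saving depends heavily on request volume and GPU utilization, so the same calculation is worth repeating across low, expected, and peak traffic scenarios.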

Client Testimonial

“GenAI Protos delivered a clear strategy to transition our LLM workloads to open-source models. Their expertise in benchmarking and cost analysis helped us reduce costs significantly while improving performance and control over our AI systems.”